We all make judgments of probability and depend on them for our decision-making.

However, it is not always obvious which judgments to trust, especially since a range of studies suggests that these judgments can be less accurate than we might hope or expect. For example, scholars have argued that at least 4% of death sentence convictions in the US are false convictions, that tens or even hundreds of thousands of Americans die of misdiagnoses each year, and that experts can sometimes be 100% sure of predictions that turn out to be false 19% of the time. So we need trustworthy judgments; otherwise, bad outcomes can follow.

How, then, can we determine which judgments to trust—whether they are our own or others’? In a paper I recently published (which is freely available here), I argue for an answer called “inclusive calibrationism”—or just “calibrationism” for short. Calibrationism says trustworthiness requires two ingredients—calibration and inclusivity.

The First Ingredient of Trustworthiness: Calibration

The calibrationist part of “inclusive calibrationism” says that judgments of probability are trustworthy only if they are produced by ways of thinking for which we have evidence of good calibration. Here, “calibration” is a technical term that refers to whether the probabilities we assign to things correspond to the frequency with which those things are true. Let us consider an example.

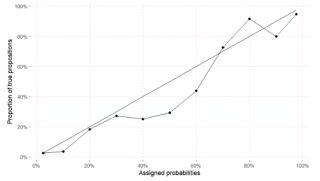

Below is a graph which depicts the calibration of a forecaster from the Good Judgment project—user 3559:

Good calibration of a good forecaster

Source: John Wilcox

The graph shows how often the things this forecaster assigned probabilities to turned out to be true. For example, the top-right dot represents all the unique events to which they assigned a probability of around 97.5% before those events did or didn’t occur: that Mozambique would experience an onset of insurgency between October 2013 and March 2014, that France would deliver a Mistral-class ship to a particular country before January 1st, 2015, and 17 other events. Of these 19 events, about 95% actually occurred. Likewise, of all the events this person assigned a probability of approximately 0%, about 0% occurred.

In this case, this person has a relatively good track record of calibration because the probabilities that they assign to things correspond (roughly) to the frequency with which those things are true.
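To make the idea concrete, here is a minimal Python sketch of how a calibration graph like the one above could be tabulated from a forecaster’s record. It assumes forecasts are stored as hypothetical (probability, outcome) pairs and grouped into ten equal-width probability bins; the bin width and the function name `calibration_table` are illustrative choices, not necessarily how the graphs in this article were produced.

```python
from collections import defaultdict


def calibration_table(forecasts, n_bins=10):
    """Group (probability, outcome) pairs into equal-width probability bins.

    forecasts: iterable of (p, happened), with 0 <= p <= 1 and happened a bool
    (a hypothetical record format).
    Returns (mean forecast, observed frequency, count) for each non-empty bin,
    ordered from the lowest to the highest probabilities.
    """
    bins = defaultdict(list)
    for p, happened in forecasts:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"probability out of range: {p}")
        # int(1.0 * n_bins) would create an extra bin, so clamp to the last one.
        bins[min(int(p * n_bins), n_bins - 1)].append((p, happened))

    table = []
    for index in sorted(bins):
        pairs = bins[index]
        mean_forecast = sum(p for p, _ in pairs) / len(pairs)
        observed = sum(1 for _, happened in pairs if happened) / len(pairs)
        table.append((mean_forecast, observed, len(pairs)))
    return table


# Hypothetical record: 19 events forecast at 97.5%, 18 of which occurred,
# plus 20 invented events forecast at 0%, none of which occurred.
record = [(0.975, True)] * 18 + [(0.975, False)] + [(0.0, False)] * 20
for mean_p, freq, n in calibration_table(record):
    print(f"forecast {mean_p:.3f} -> observed {freq:.3f} (n={n})")
```

A well-calibrated record produces rows whose observed frequencies sit close to their mean forecasts, as in the top bin here, where a 97.5% forecast is matched by 18 of 19 events (about 95%) occurring.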

And this holds even when those things concern “unique” events to which we might have thought we couldn’t assign probabilities. After all, a particular insurgency either would or would not occur; it’s a unique event, so it’s not obvious that we can assign it a numerically precise probability, like 67%, that reflects the objective odds of its occurrence. But it turns out that we can, at least if we think in the right ways.

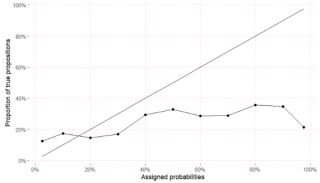

Yet not all of us are so well calibrated. Below is a particular individual, user 4566, who assigned probabilities of around 97% to things which were true merely 21% of time, such as Chad experiencing insurgency by March 2014 and so on.

Miscalibration of a poor forecaster

Source: John Wilcox

Studies have shown that people can be more or less calibrated across a range of domains, including medical diagnoses and prognoses, general knowledge about past or current affairs, geopolitical topics, and virtually anything else.

Calibrationism then says that judgments of probability are trustworthy only if we have evidence that they are produced by ways of thinking that are well calibrated—that is, by ways of thinking that look more like the first forecaster’s calibration graph than like the second forecaster’s calibration graph.

Of course, for many kinds of judgments we lack strong evidence of calibration, and so we have little ground for trusting those judgments of probability unquestioningly. This is true, for instance, in some parts of medicine and law, where there is evidence that inaccurate judgments lead to misdiagnoses or false convictions at least some of the time, even if judgments are perfectly good the rest of the time.

But in other contexts, we simply lack evidence either way: our judgments could be as calibrated as the first forecaster’s or as miscalibrated as the second’s, and we have no good way to tell which.

The calibrationist implication, then, is that in some domains we need to measure, and possibly improve, our calibration before we can fully trust our judgments of probability in those domains.
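Measuring calibration can also mean summarizing it in a single number. One common summary, swapped in here for illustration rather than taken from this article, is the expected calibration error: the average gap between the mean forecast and the observed frequency in each bin, weighted by how many forecasts fall in that bin. The Python sketch below assumes a hypothetical (probability, outcome) record format and ten equal-width bins; a score of 0 means perfect calibration on this measure.

```python
from collections import defaultdict


def expected_calibration_error(forecasts, n_bins=10):
    """Count-weighted mean of |mean forecast - observed frequency| over bins.

    forecasts: iterable of (probability, happened) pairs (hypothetical format).
    Returns 0.0 for perfect calibration and at most 1.0 in the worst case.
    """
    # Per-bin running totals: [sum of forecasts, number that happened, count].
    bins = defaultdict(lambda: [0.0, 0, 0])
    for p, happened in forecasts:
        totals = bins[min(int(p * n_bins), n_bins - 1)]  # clamp p == 1.0
        totals[0] += p
        totals[1] += int(happened)
        totals[2] += 1
    total_count = sum(count for _, _, count in bins.values())
    if total_count == 0:
        raise ValueError("no forecasts to score")
    # Each bin contributes |mean forecast - observed frequency| * count,
    # which simplifies to |sum of forecasts - number that happened|.
    weighted_gap = sum(abs(p_sum - hits) for p_sum, hits, _ in bins.values())
    return weighted_gap / total_count


# Hypothetical records echoing the two forecasters above; the sample sizes
# are invented for illustration.
good = [(0.975, True)] * 18 + [(0.975, False)]
poor = [(0.97, True)] * 21 + [(0.97, False)] * 79
print(f"good: {expected_calibration_error(good):.3f}")
print(f"poor: {expected_calibration_error(poor):.3f}")
```

A single number is convenient for comparing people or methods, but it hides which probability ranges are miscalibrated, so it complements a calibration graph rather than replacing it.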

The good news, however, is that this is possible, as I discuss in my book. For example, some evidence (e.g., here and here) suggests that we can improve our calibration by drawing on statistics about the frequency with which things have happened in the past. For instance, we can better predict a future recession if we look at how often recessions have occurred in similar situations in the past. We can similarly use statistics like these to estimate the probabilities of election outcomes, wars, a patient having a disease, and even more mundane outcomes, like whether someone has a crush on you.
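The base-rate idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the past outcomes are a hypothetical list of booleans, one per situation judged similar to the current one, and choosing that set of similar situations is the hard part that code cannot do for us. The optional `pseudo_count` parameter adds additive smoothing (with `pseudo_count=1.0` it is Laplace’s rule of succession), an addition not discussed in this article that keeps small samples from yielding exactly 0% or 100%.

```python
def base_rate(past_outcomes, pseudo_count=0.0):
    """Estimate a probability as the share of similar past cases where the
    outcome occurred.

    past_outcomes: hypothetical list of booleans, one per similar past case.
    pseudo_count: optional additive smoothing; 1.0 gives Laplace's rule of
    succession, (hits + 1) / (n + 2).
    """
    n = len(past_outcomes)
    if n == 0 and pseudo_count <= 0:
        raise ValueError("need past cases or a positive pseudo_count")
    hits = sum(1 for occurred in past_outcomes if occurred)
    return (hits + pseudo_count) / (n + 2 * pseudo_count)


# Hypothetical: a recession followed in 3 of 10 past situations judged similar.
history = [True] * 3 + [False] * 7
print(f"raw base rate: {base_rate(history):.2f}")
print(f"smoothed:      {base_rate(history, pseudo_count=1.0):.2f}")
```

With only ten past cases, the smoothed estimate (4/12) sits slightly closer to 50% than the raw one (3/10); as the number of similar past cases grows, the two estimates converge.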


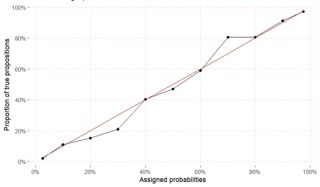

The other good news is that some people are well calibrated, meaning that they can provide us with trustworthy judgments about the world. For example, take user 5265 from the Good Judgment’s forecasting tournament. In the first year of the tournament, their judgments were well calibrated, as the below graph depicts:

A well calibrated forecaster in year 1 of a forecasting tournament

Source: John Wilcox

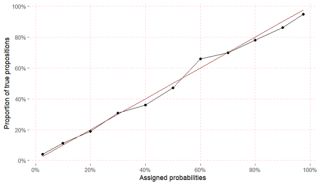

If we were in the second year of the Good Judgment tournament, and we were about to ask this person another series of questions, we could then have similarly inferred the calibration and trustworthiness of their judgments about the future. And in fact, that is exactly what we see when we look at their track record of calibration for the second year of the tournament below:

The same well calibrated forecaster in year 1 of the forecasting tournament

Source: John Wilcox

More generally, the evidence indicates that a track record of accuracy is the strongest indicator of someone’s accuracy in other contexts, and a better indicator than education level, age, experience, or anything else that has been scientifically tested.

So a track record of calibration is one important ingredient of trustworthiness, but it is not the only one, as I will discuss in Part Two.